[Shameless Plug Warning] :

You have until August 31st, 2017 to try out NetMon and participate in LogRhythm’s Network security contest. Win up to $18,000 when applying your scripting skills to detect network vulnerabilities. See https://logrhythm.devpost.com/ for more information.

Updates

April 23rd, 2014: Added the ideone examples to GitHub: https://github.com/KjellKod/blog_linkedlist_vs_vector

Java Battle Royal: LinkedList vs ArrayList vs DynamicIntArray

My obstinate anti-LinkedList blog entry which turned into a CodeProject article recently took some heat from a former college, David.

I think his objections were smart, to the point and important. Many of his objections came up earlier at CodeProject but instead of expecting the reader to read through all the comments there and here I decided to take a different approach. Instead of doing an easy-to-miss reply in the comments I decided to do a blog sort-of reply here. I am sure many has the same objections and questions as the ones earlier asked, and it is good to bring them into the light (questions + people ;). This blog post will highlight some issues that were not clearly explained or could be misinterpreted.

Some cut and paste from the reply gives a nice picture of what we should all strive for:

David wrote:

What you should do is to look at your problem and create the most suitable solution for it

[…]

I would try to encourage them choose the correct one [kjell: data structure choice] for the specific problem! […]

Well put. I could not agree more.

Some of the irate comments I have received I can sympathize with. My anti-LinkedList entry can be argued used somewhat contrived examples, even if I actually used real-life examples that I have encountered.

David was so kind as to suggest a few examples when LinkedList should shine. Let us look at them and compare. Let us also quickly do a recap of what the article showed.

[ Rationale ]

On algorithms: Doing performance testing on data structures is tricky. If your algorithm is faulty, working against the nature of the structure or has logical errors you can get bad performance even if the produced result is correct.

Looping through a LinkedList using index, or through an ArrayList using iterator are both examples of working against the nature of the structure. Both will also have a sad impact on performance. In the testing below such against-the-nature approaches are called “naive“.

Such a naive approach is probably taken by mistake, by laziness or by lack of knowledge : it might just be that it is pseudo intuitive for coders who have not bothered to understand nor read up on how the structure works. A more sad reason for choosing a naive approach is when the coder follows the rule of premature pessimization.

On performance: The Java code snippets performance testing were mostly done at ideone.com. The performance testing is a bit sketcy. Should it be done cold or warm? Playing with repeating gave sometimes the following result

Run 1: fast

Run 2: slower

Run 3: fastest so far

This is a Java optimization artifact and whether justified or not I decided to run the tests cold.

[ Let it begin ]

Thanks to David’s and @rc’s comments I can soon update the Java section on the article. At the time of writing the Java section is just simply wrong. They [David and “rc”] (aware or not) kindly helped me to understand how to squeeze out the cache boosted performance out of Java’s ArrayList and how a wrong use of it and LinkedList will just give horrible performance for both.

David wrote:

When using LinkedList you should be using it the way it’s [supposed] to be used, e.g. _not_ using indexes. It is, as the name suggests, a _linked_list_ and behave really bad using indexes to find elements

[…]

Huge blunder by me. Of course a LinkedList should use iterators instead of calculating the indexes. That approach was born out of a desire to be generic. It very much fits my own description of naive and I was happy to correct it. Thank you for pointing it out.

When treating both structures right, and comparing them in the original scope the ArrayList still shines over LinkedList.

[ Silly “insert sorted” to prove a point ]

Correcting the code according to David’s example fixed the code and for a while LinkedList rocked in comparison to ArrayList. This just did not make sense to me and I decided to skip being naive and read up on ArrayList. This resulted in simple changes that lead to a huge performance boost for ArrayList.

For performance reasons traversing the ArrayList should use the random access lookup and being treated in function calls as an ArrayList and not as a List (my bad). It will remove some from being generic and it will hurt the object oriented fanatics but it will squeeze out that cache-friendly nature of it that we want.

I.e.an example of when ArrayList is unnecessarily slowed down because it is iterating as a List and not an ArrayList. The ArrayList loop will be roughly twice as fast as the List loop

.

// SLOWER: as shown in http://ideone.com/1wnF1

private static void linearInsertion(Integer[] intArray, List<Integer> list) {

[...]

int list_size = list.size();

for (int i = 0; i < list_size; i++) {

if (integer.compareTo(list.get(i)) >= 0) { // ... more code

..

// FASTER: as shown in http://ideone.com/JOJ05

private static void linearInsertion(Integer[] intArray, ArrayList<Integer> list) {

[...]

int list_size = list.size();

for (int i = 0; i < list_size; i++) {

if (integer.compareTo(list.get(i)) >= 0) { // ... more code

.

The only difference between the snippets above is that the ArrayList is at top iterated through the List interface and in the second it is iterated through the ArrayList interface. The difference in performance is huge. So almost identical code, for the same structure is behaving radically different. If you would use iterator instead of random access lookup then the ArrayList would perform even worse. Please see this reference at Oracle for more information.

[ DynamicIntArray ]

In Java the C++ std::list equivalent is called LinkedList. The C++ dynamic array std::vector is in Java called ArrayList. There is one big difference between these libraries, both the Java LinkedList and ArrayList can only contain objects. This means that the boost of adjacent memory will be somewhat less for an ArrayList containing Integer objects. To access and compare values for the search there is one extra indirection, from reference to value.

To see what difference that made for ArrayList I threw in a DynamicIntArray to get a truly cache friendly structure to compare with. The DynamicIntArray use int, not Integer objects.

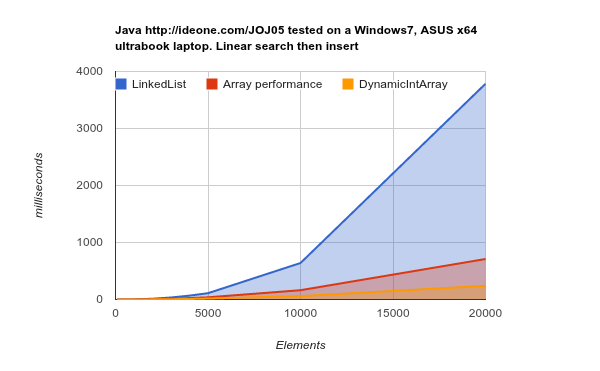

From: http://ideone.com/JOJ05 Linear search, then insertion to prove a point. Times are in microseconds [us] #Elements LinkedList ArrayList DynamicArray 100 47 (157%) 39 (130%) 30 (100%) 200 177 (197%) 128 (142%) 90 (100%) 500 1074 (205%) 802 (153%) 524 (100%) 1000 4527 (233%) 2756 (142%) 1946 (100%) 2000 21782 (259%) 10873 (129%) 8405 (100%) 3000 49707 (232%) 26284 (122%) 21468 (100%) 4000 91929 (238%) 46914 (121%) 38652 (100%) 5000 141390 (246%) 75871 (132%) 57452 (100%) 10000 628813 (258%) 317474 (130%) 243352 (100%) 20000 3125540 (322%) 1319927 (136%) 971471 (100%)

With the corrections in place the LinkedList showed immediately that it was performing worse than the ArrayList. For the test on ideone.com (http://ideone.com/JOJ05) the LinkedList was performing from a factor of 1.5 to a factor of 3 slower then the best array container.

On my x64 ultrabook the cache architecture is different and the cache friendly structures got a nice boost. The LinkedList was then a factor 1.5 to factor 15 slower then the DynamicIntArray for the same range of elements.

Integer object slow down for ArrayList

On ideone.com the extra indirection overhead of using Integer objects instead of int was roughly a 30% slow down for ArrayList in comparison to the faster DynamicIntArray. On my x64 laptop the ArrayList took roughly twice (200%) as long time to finish as the DynamicIntArray (still faster than at ideone.com)

What this scenario really shows is that the overhead of array shuffling, creating larger arrays when needed and copying content to the larger array is not that expensive performance wise. At least in comparison with traversing a disjoint memory structure with lots of cache misses, lots of cache loading and slow RAM access to get what to load.

What this scenario shows is that the sheer amount of the operations needed to find and do an insert in the middle of a dynamic array plays mind tricks with people. LinkedList insertion is: search time + O(1). ArrayList insertion is search time + O(n). Unless you have the pointer in advance the LinkedList search time is excruciatingly slow

I.e. there are lots and lots of cache misses when traversing a LinkedList. This means idle CPU cycles. Which is expensive. Which means that that even though more data has to be shuffled the adjacent memory structures ArrayList and DynamicIntArray win in this silly scenario.

I.e. search time + O(1) is slower than search time + O(n). What does this mean?

[ O(1) beats O(n), or does it? ]

David wrote:

Cache is a faster memory, period, nothing else and it certainly doesn’t make O(n) go O(1).

Yes. Exactly. Or. Kind of.

Cache is much faster then disjoint memory traversal (lots of RAM fetching). However doing the big O comparison is just pointless. Let me be repeat this: It is pointless to compare algorithms that way.

Please, everyone who reads this. Be extremely careful when juggling Big O – you are on a slippery slope if you use it for drawing conclusions of which container or algorithm to choose. If comparing algorithms and containers beware for drawing conclusions like X with O(n) is slower then Y which has O(1). Or statements such as Y with O(n log n) beats Z which runs in O(n2).

Big-O notations tells nothing about how one algorithm fare against another algorithm. Big-O will only tell you how performance will degrade as n increases.

In my CodeProject article I wrote about this:

Kjell wrote:

“[…] for a given problem set these mathematical models are completely and utterly false“

This might be clumsily written out of frustration. The mathematical models are accurate but are so severely abused in the computer science area that I consider them [for coders use] to be flawed and dangerous.

Remember this from the linear search, then insert example?

LinkedList insertion is: search time + O(1).

ArrayList insertion is search time + O(n).

In the silly example “search then insert” the search complexity for both was O(n). But the search time for LinkedList grows rapidly, at first seemingly near exponential up to a certain level.

[ Almost but not quite, exponential increase for linked-list ]

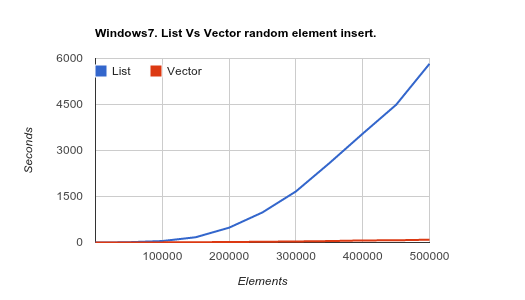

C++ std::vector vs std::list for linear search then insert of random values.

Windows7. The linked-list insertion time grows at an alarming rate, almost with an exponential look

C++ std::vector vs std::list for linear search then insert of random values.

Here on Ubuntu it is very clear how the curve levels off.

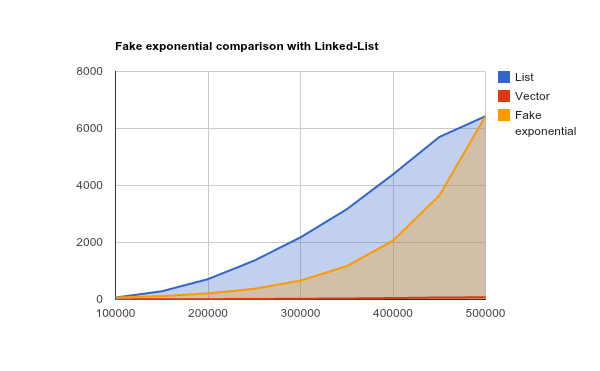

y = a(1+r)ˆx

No. It is not exponential. So that formula won’t work

If monitoring the numbers closely you would see that the exponential growth formula does not work. The growth factor decreases and eventually it clearly levels off. At these levels the time usage for linked-list is absurd but I thought the fake exponential display would be good for clarity. The linked-list search time does not grow exponentially, it is just excruciatingly slow from the get-go.

[ The most common operation on a data structure ]

The article got a response “6 good reasons to always use linkedlist” from steveb. He and David makes similar observations

steveb wrote:

“[If] Your insertion is always done [at] the index 0 or somwhere in the middle. No matter how large list grows the operation perfomed in constant time O(1). With vectors it is O(log(n)). The larger the array the slower the insertion.”David wrote:

“[A] very common example when adding elements to the beginning a list. Using a LinkedList this would be a fairly simple operation (just swapping some pointers) while the ArrayList would struggle to make such insertion (moving the entire array)”

Copy and pasting from my own article comment answer to steveb:

“What you wrote above is completely accurate. You are not the first to mention this and while it is so right it is also so wrong. Let me explain.

The scenario you outlined is very believable. In fact putting one piece of data in front of an older piece of data and so forth is perhaps the most common task for data collecting?

In this scenario it makes sense to do insert at “index 0” for the linked-list. This does not makes sense for the vector.

The default insertion on vector should be done by push_back. Insertion in the direction of growth. An obvious choice to avoid shifting the whole previous section. This is made abundantly clear in C++ where the std::vector[^] have push_back, and insert but lacks completely the function push_front

So, let us not be silly [naive] here, right? Doing push_back on the vector instead and comparing that to insert at index 0 on the linked-list that is probably the valid options a programmer would choose from. Don’t you agree?

push_back on the vector does not need to shuffle everything for every new element. The overhead when the vector is full and need to be re-sized still applies.”

A code and performance comparison between these three insertions can be seen at http://ideone.com/DDEJF. It compares between the common linked-list operation : insert at “index 0” vs std::vector push_back vs a naive std::vector “push_front".

From: http://ideone.com/DDEJF. Times are given in microseconds [us] elements, list, vector, vector (naive) 10, 3, 6, 1 100, 7, 3, 13 500, 43, 7, 83 1000, 70, 10, 285 2000, 149, 27, 986 10000, 928, 159, 22613 20000, 1578, 284, 97330 40000, 3138, 582, 553636 100000, 7802, 1179, 3555756 Exiting test,. the whole measuring took 4266 milliseconds (4seconds or 0 minutes)

Ignoring the “naive” push_front. Showing Linked-List insert at first position vs std::vector insert in its direction of growth with push_back

[ Applying a filter to a set of values ]

David wrote: [in regard to finding an example where linked-list would rule]

[..] to filter an array of elements, removing the values that are above or below a threshold, perfect for an linked list (http://ideone.com/KM5Ev)! And wouldn’t those examples be “Number crunching problems”?

Yes. Definitely in the number crunching area. Let us take a look at that then. Remember that we are trying to be realistic here. The naive approach might be interesting but will not prove anything. Let us look at how we can apply both structures at their best to solve the filtering problem.

Printout from : http://ideone.com/NH6jl

Filter containers with random 1.000 numbers Performance is measured in microseconds [us] Naive ArrayList 1200 Less naive ArrayList 691 Davids 'set/replace' ArrayList 805 LinkedList 1221 Smart ArrayList 278 Smart DynamicIntArray 403 All containers filtered equally: true Filter containers with random 100.000 numbers Performance is measured in microseconds [us] Naive ArrayList 2230954 [working against] Less naive ArrayList 1074467 [working with, but performance ignorant ] Davids 'set/replace' ArrayList 3504 [smart in-place algorithm ] LinkedList 6229 Smart ArrayList 3471 ["simple/smart", wanted values --> to work copy] Smart DynamicIntArray 2363 ["simple/smart", wanted values -->to work copy] All containers filtered equally: true ************************************** Another view Elements Davids Arraylist LinkedList copy ArrayList copy DynamicArr NaiveArrayList LessNaiveArrayList 10 21 129 24 21 29 14 1.000 805 1221 278 403 1200 691 100.000 3504 6229 3471 2363 2230954 1074467

For small range of values, 10 – 1000, even the completely naive array-based solution is just as fast or faster then the Linkedlist. This means that for smaller ranges the cache friendly array structure is much more forgiving. It is not too bad to use a completely naive solution and still beat or come in split position with LinkedList.

After 1.000 elements the naive array-based solutions pay the price. For the more sensible array-based solutions the relative order of effectiveness seems to be pretty much the same from 1.000 elements up to 100.000. I.e. the array-based solutions beat the LinkedList solutions with factor ranging from 1.5 up to 4. Maybe not that much difference but still, remember it is the battlefield chosen to favor the LinkedList.

[ What about sorting? ]

The initial “linear search, then insert” should of course never be thought of as a optimal way of sorting a data structure. It is in fact an extremely slow way of sorting. Not in my wildest dreams have I even considered the possibility that anyone would consider my example as a suggestion for sorting. Please do not do that. It is there to prove a point. Nothing else, nothing more.

For both linked-list and the dynamic array the initial “sort” example is very ineffective, but the point was made!

Sorting is interesting. How will the LinkedList fare against array-based structures when it comes to sorting? By now the answer should be clear, but let us verify it first, shall we? But the algorithm for sorting one data structure will not be effective on another. Here we initially trust the Java (and C++) libraries to be as effective as they can for each data structure.

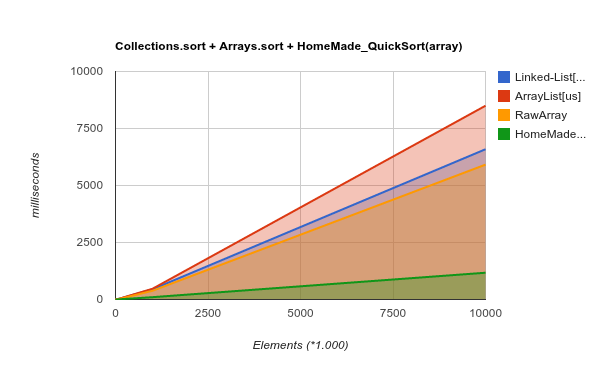

Sorting the data structures using the Java Collections.sort(…) for ArrayList and LinkedList and Arrays.sort(…) for the raw array gave the following.

Java sort examples from http://ideone.com/0l8Ct Elements Linked-List[us] ArrayList[us] RawArray 10 150 (938%) 128 (800%) 16 (100%) 1.000 3406 (1577%) 1628 (754%) 216 (100%) 10.000 6360 (215%) 4757 (161%) 2954 (100%) 100.000 56693 (125%) 54353 (120%) 45378 (100%) 1000.000 949816 (123%) 1054523 (136%) 774160 (100%)

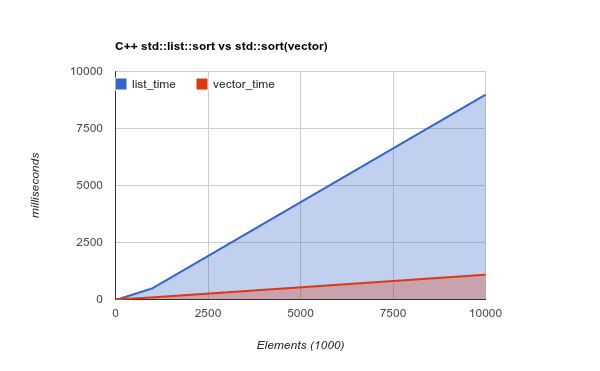

For me, this is uncharted territory. I read somewhere that Collections.sort would dump the list into an array and then sort it. I read somewhere else that Arrays.sort(raw_array) would use QuickSort but that Collections.sort(LinkedList) or Collections.sort(ArrayList) would use MergeSort. Whatever the case was I could compare their performance related to each other, and expected to see similar figures as when I compared C++ std::list::sort and std::sort(vector) (http://ideone.com/3eouT).

As n grows the C++ sorting of the array became more effective compared to the std::list, not less as shown in the Java sort example above.

C++ sort as shown in http://ideone.com/3eouT ********** Times in microseconds (us) ********** Elements SORT(List, Vector) elements list_time vector_time 10 5, (250%), 2(100%) 1.000 139, (239%), 58(100%) 10.000 1823, (270%), 674(100%) 100.000 26167, (324%), 8058(100%) 1000.000 507663, (519%), 97794(100%) 10.000.000 9331131, (831%), 1122050(100%)

Why did the Java sorting relative performance not follow the C++ example as the numbers went up? It is still somewhat of a mystery but I got closer to the answer when I just had to take care of a must-try “itch” that I scratched by trying out Quicksort on the DynamicIntArray.

C:\temp>java -Xmx756m Main . Times in microseconds. Same as http://ideone.com/0l8Ct but with a home wrought QuickSort (nothing special). Collections.sort on Linked-List and ArrayList. Arrays.sort on RawArray Home made QuickSort on RawArray Elements Linked-List ArrayList RawArray HomeQuickSort(rawArray) 100 513 245 123 1417 1.000 1503 1193 387 458 10.000 4173 3795 2819 863 100.000 32468 33854 24440 9193 1000.000 448278 470919 382999 101954 10.000.000 6589875 8498179 5914902 1174076

Putting this into a chart instead put mayhap things into perspective.

This relationship between DynamicIntArray/QuickSort and the rest looks almost identical to the C++ std::list vs std::vector sorting.

The Arrays.sort and Collections.sort(ArrayList) follow, surprisingly, very closely the Collections.sort(LinkedList).

I seriously doubt that my QuickSort is that effective, the explanation lies elsewhere. I.e. to me it seems like the positive cache effects seems to be missing from Arrays.sort and Collections.sort(ArrayList) while the cache friendliness of the array is showing for the DynamicIntArray/QuickSort.

But hey, I am Java naive so maybe it has a natural, very obvious explanation that is escaping me. Maybe delving into this mystery will be the subject for a future blog entry?

[ Conclusions ]

Any new conclusions to draw from all this testing? Well, choosing the right algorithm for the task is of course of paramount importance. And choosing the right data structure goes hand in hand with that.

The CPU-cache architecture plays a huge importance of course. On ideone.com the cache performance boost is not that significant. On a modern x64 ultrabook laptop the boost is so much more. If anything the use of ideone.com in this blog entry downplays the relative performance boost of cache friendly structures vs cache hostile structures (linked-list). Please try out the ideone.com examples yourself, at ideone.com and on your computer. Notice that your computer will (likely) show much larger difference – favoring the ArrayList and DynamicIntArray.

In the area of number crunching you will be hard pressed to come up with realistic scenarios where LinkedList with its disjoint memory beats array-based contiguous memory structures. Even for the LinkedList favored scenarios it was dead simple to beat it performance wise.

LinkedList has its uses. It is not horrible to use LinkedList,. but make no mistake about it. In the area of number crunching there is one who almost always rule. The Array is King.

Hmmm, absolutely not.

It calls exactly the same methods of ArrayList, whatever the apparent type of the variable is.

Ah, I check your full code,

more codeis not the same in the two cases 😉No problem. I realize that section was a confusing and it was easy to miss why it was slower. Thanks to your comment I have updated the section

[ Silly “insert sorted” to prove a point ]. I think you should find it clearer now.

Pingback: Big-O FTL? – Skittish Sloth